Our Human-Assisted AI Development Framework for Production Systems

There's a gambling mentality spreading through AI-assisted development. Developers sit at their terminals like they're at a slot machine, pulling the prompt lever over and over.

"Just one more try." "Maybe if I phrase it differently." Three hours later, they've burned through tokens and patience, and the code still doesn't work the way they need it to.

We've watched this happen. We've done it ourselves. And we've learned that treating AI like a lottery ticket produces lottery-ticket results: occasionally you win, but the house always wins more.

The demos make it look easy. Someone types a prompt, code appears, the app works. What they skip is what happens when you need to maintain that code, debug it, or extend it six months later. The demo ends at "it works." Production begins there.

This article explains how we structure AI-assisted development for real projects. It builds on our AI principles with the practical methodology we use daily.

The Core Problem: Working Code Isn't Enough

AI can generate working code quickly. That part is solved.

The challenge is what happens after. You ask for a user editing interface. The AI builds it with a modal approach. It works. Then you realize modals have accessibility issues, so you switch to a list-detail-edit navigation pattern. The AI generates three separate views. They all work.

Then you open the code and find the same logic repeated in each file.

This happened to us recently. The feature worked. It was editing users, doing what it needed to do. But when we looked at the code, it was a mess. Low quality. The kind of code that makes you nervous to touch because you don't know what will break.

That's the pattern we see consistently.

The AI solves the immediate problem without considering how the solution fits into the larger system. It optimizes for "working" when you need "maintainable."

The AI Lifespan Principle

The shorter the useful life of what you're generating, the easier it is to generate with AI.

A one-time script that runs once and gets deleted? Pull the slot machine lever all you want. A campaign image that lives for a week before the next one replaces it? Speed matters more than craft. A screenshot for Twitter that gets likes for three days and disappears? AI handles that fine.

An application that users depend on, that will be maintained for years, that needs to evolve with changing requirements? That's different. That's alive. It's being iterated, modified, updated.

The vibe coding approach falls apart here because you're not generating a static snapshot. You're building something with longevity.

Treating AI as a Colleague

The mindset shift that changed everything for us: treat the LLM like a colleague you're guiding through your codebase.

You can't just say "make my sandwich" and expect production results. The autonomous coding demos aren't science fiction. They do work. The question is whether the result meets your standards for something you'll maintain.

When you don't care much about quality, security, or long-term liability, autonomous generation is fine. That describes prototypes and experiments, not production systems.

We use AI-assisted coding. It has a place in the process. It's great for designing screens, for quick prototypes to test ideas, and for exploring approaches before committing.

The problem is when people try to take that all the way to production without the intervening steps.

Our AI Development Methodology

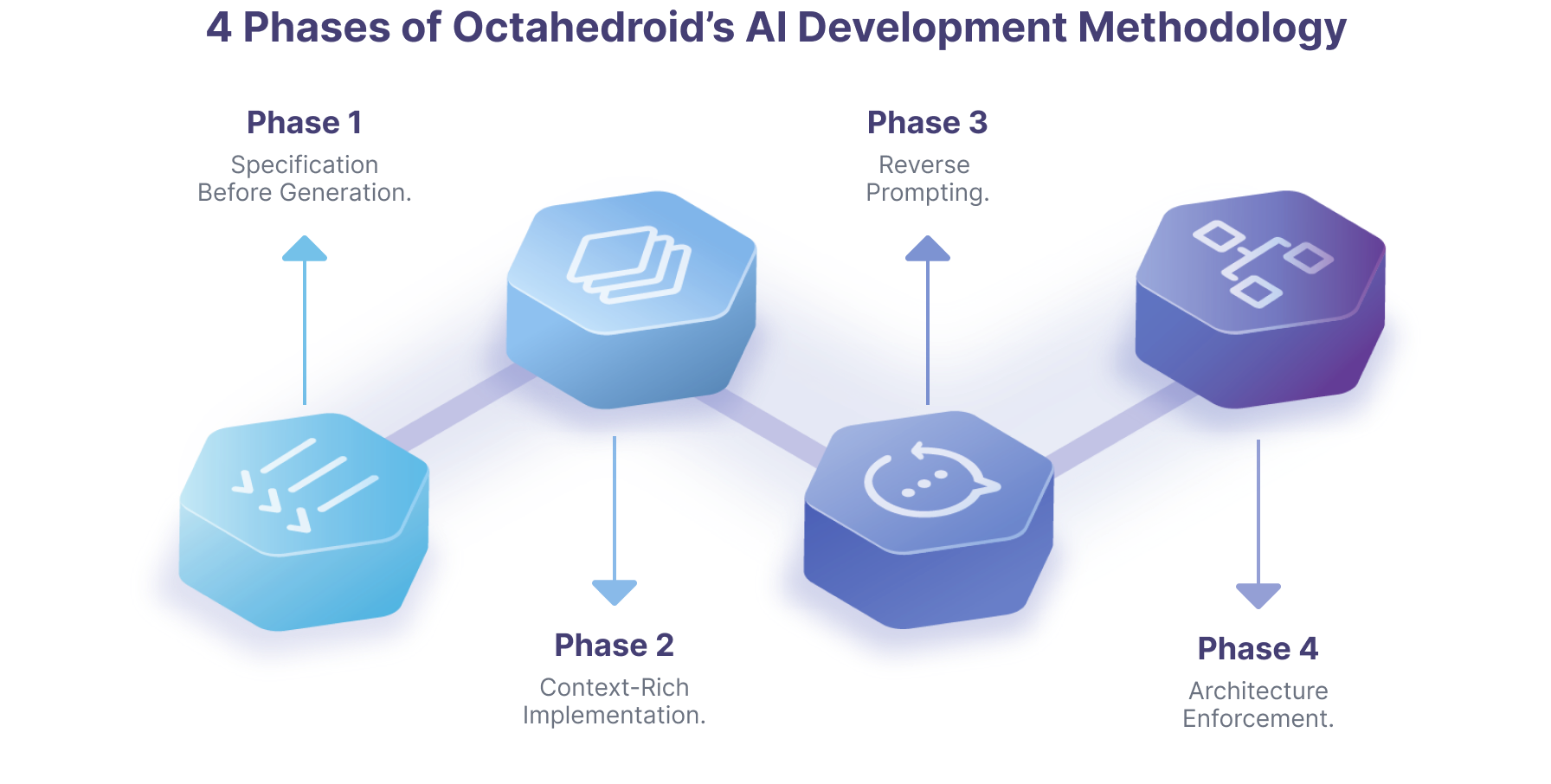

Our methodology has four phases.

The development model doesn't actually change much from traditional approaches. You still need planning. You still need specifications. You still need to break features into discrete pieces. The AI accelerates execution within that structure.

Phase 1: Specification Before Generation

We start with documentation, not prompting.

Before any AI interaction, we write technical specifications that define what we're going to build: business requirements, technical constraints, integration points, and success criteria.

One of our specification documents for a single feature ran to over 1,600 lines. That sounds excessive until you realize the LLM processes those tokens in seconds and produces dramatically better output as a result.

Writing the specification forces clarity. If you can't explain what you're building in a document, you're not ready to build it. Projects that skip this phase consistently fail. The AI generates code, but the wrong code. You end up maintaining solutions that don't actually solve your problem.
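One way to keep those four areas from being skipped is a simple completeness check on the specification outline. The sketch below is only an illustration: the section names come from the list above, while the `FeatureSpec` shape, the `missingSections` helper, and the sample content are assumptions, not our actual document format.

```typescript
// Hypothetical outline of a feature specification. The four section names
// mirror the areas named in the article; everything else is an assumption.
interface FeatureSpec {
  feature: string;
  businessRequirements: string[];
  technicalConstraints: string[];
  integrationPoints: string[];
  successCriteria: string[];
}

type SpecSection = Exclude<keyof FeatureSpec, "feature">;

const REQUIRED_SECTIONS: SpecSection[] = [
  "businessRequirements",
  "technicalConstraints",
  "integrationPoints",
  "successCriteria",
];

// Returns the names of the sections that are still empty.
function missingSections(spec: FeatureSpec): SpecSection[] {
  return REQUIRED_SECTIONS.filter((section) => spec[section].length === 0);
}

// Illustrative draft: two sections are filled in, two are not.
const draft: FeatureSpec = {
  feature: "User editing",
  businessRequirements: ["Admins can edit a user's name and email"],
  technicalConstraints: ["The edit flow must be keyboard accessible"],
  integrationPoints: [],
  successCriteria: [],
};

const gaps = missingSections(draft);
```

Here `gaps` lists the sections still to be written. A check like this says nothing about the quality of a section, only that it exists.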

Phase 2: Context-Rich Implementation

The quality of AI output depends more on context than on clever prompting.

We feed the AI everything relevant: previous project patterns, code style guidelines, examples of similar implementations, documentation of business logic. The conversation matters more than any single prompt.
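As a rough sketch of what "feeding everything relevant" can look like in code, the helper below assembles labeled context sources and a task into a single prompt body. The `ContextSource` shape, the `buildContext` name, and the section layout are assumptions made for illustration. No model call is shown because that depends on the tool you use.

```typescript
// Hypothetical representation of one piece of context handed to the model.
interface ContextSource {
  label: string; // e.g. "Code style guidelines"
  content: string; // the text itself
}

// Joins every non-empty source into labeled sections and puts the task last.
function buildContext(sources: ContextSource[], task: string): string {
  const sections = sources
    .filter((source) => source.content.trim().length > 0)
    .map((source) => `## ${source.label}\n${source.content.trim()}`);
  return sections.concat(`## Task\n${task.trim()}`).join("\n\n");
}

const prompt = buildContext(
  [
    { label: "Code style guidelines", content: "Use named exports." },
    { label: "Similar implementations", content: "" }, // empty, so skipped
  ],
  "Add an edit view for users."
);
```

Keeping the sources as data rather than as one hand-written prompt makes it easier to reuse the same guidelines across conversations.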

This is where the colleague metaphor becomes practical. You're collaborating with something that needs guidance. You lead it through what needs to happen, step by step, correcting course as you go.

When working with less common frameworks, the AI's knowledge gaps become obvious. The major LLMs know Next.js thoroughly because there's extensive documentation and examples across the internet. Try newer frameworks without massive adoption (frameworks that aren't bad, just less documented in training data) and you'll notice the difference.

Tools like Context7, Deepwiki, and Augments help by organizing documentation into searchable knowledge bases the AI can reference. But the fundamental approach stays the same: comprehensive context produces quality output.

Phase 3: Reverse Prompting

This is where our methodology differs most from typical AI coding workflows.

After the AI generates working code, we analyze what it built and extract the patterns. We call this reverse prompting.

Here's how it works. You've had a long conversation building a feature. The code works. Now you tell the AI: "Based on everything we just discussed, create a set of rules, patterns, and practices that we followed."

The AI synthesizes the conversation into concrete guidelines. Those guidelines get integrated into your project's context files. When you work on similar features later, the AI has documented patterns to follow.
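The bookkeeping side of this step can be sketched as follows: take the model's reply to the reverse prompt, pull out the individual rules, and merge them into the rules already kept for the project. This assumes the reply arrives as a bulleted list, and the `parseRules` and `mergeRules` helpers are hypothetical names. How the reply is obtained and where the rules file lives depend on your tooling.

```typescript
// The reverse prompt quoted in the article.
const REVERSE_PROMPT =
  "Based on everything we just discussed, create a set of rules, patterns, and practices that we followed.";

// Extracts rules from a reply, assuming one rule per "- " or "* " bullet.
function parseRules(reply: string): string[] {
  return reply
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.startsWith("- ") || line.startsWith("* "))
    .map((line) => line.slice(2).trim())
    .filter((rule) => rule.length > 0);
}

// Appends new rules to the existing ones, skipping case-insensitive duplicates.
function mergeRules(existing: string[], extracted: string[]): string[] {
  const seen = new Set(existing.map((rule) => rule.toLowerCase()));
  const merged = existing.slice();
  for (const rule of extracted) {
    const key = rule.toLowerCase();
    if (!seen.has(key)) {
      seen.add(key);
      merged.push(rule);
    }
  }
  return merged;
}

// Illustrative reply text, not real model output.
const reply = [
  "Here are the rules we followed:",
  "- Put shared logic in one module",
  "* Validate input in the data layer",
].join("\n");

const projectRules = mergeRules(
  ["put shared logic in one module"],
  parseRules(reply)
);
```

The merged list still needs a human read before it goes into the context files, since a model can restate the same rule in different words.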

Going back to the user editing example: after refactoring to extract the repeated logic into a shared location, we asked the AI to document what we'd done. Those extracted rules now inform future similar features. When the AI needs the same functionality in multiple places, it puts that functionality in one place and calls it from there. The AI won't always remember on its own, but with the rules in context, it has explicit guidance to follow.
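To make "one place, called from many" concrete, here is a minimal sketch of what such a refactor might look like. The article does not say which logic was duplicated, so the `displayName` and `validateUserEdit` helpers, the `User` fields, and the three view functions are all hypothetical stand-ins.

```typescript
// Hypothetical user shape for the list, detail, and edit views.
interface User {
  id: string;
  firstName: string;
  lastName: string;
  email: string;
}

type UserDraft = Pick<User, "firstName" | "lastName" | "email">;

// Shared helpers: defined once instead of being repeated in each view.
function displayName(user: Pick<User, "firstName" | "lastName">): string {
  return `${user.firstName} ${user.lastName}`.trim();
}

function validateUserEdit(draft: UserDraft): string[] {
  const errors: string[] = [];
  if (draft.firstName.trim() === "") {
    errors.push("First name is required");
  }
  // Deliberately simple check: "@" must exist and not be the first character.
  if (draft.email.indexOf("@") < 1) {
    errors.push("Email looks invalid");
  }
  return errors;
}

// Each view calls the shared helpers rather than reimplementing them.
function listRowLabel(user: User): string {
  return displayName(user);
}

function detailHeading(user: User): string {
  return `${displayName(user)} <${user.email}>`;
}

function canSaveEdit(draft: UserDraft): boolean {
  return validateUserEdit(draft).length === 0;
}
```

A rule extracted from a refactor like this might read "formatting and validation for users live in the shared helpers; views only call them."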

This phase also surfaces accumulated mess. Throughout development, you create temporary workarounds and experimental approaches. Extracting patterns forces you to confront what needs to stay versus what was scaffolding. Those obsolete prompts piling up? Eventually you have to clean them out.

Phase 4: Architecture Enforcement

AI-generated code tends toward tight coupling. Data connects directly to the UI. References get flattened. The quick solution wins over the maintainable one.

We enforce separation between data structures and presentation logic regardless of the technology stack. Your data speaks data language: references, IDs, normalized structures. Your components speak UI language: display values, layout, interaction. A layer between them handles the translation.

Here's why this matters. Vibe-coded applications often generate spreadsheet-style data structures. Everything is flat, all values are inline, easy to manipulate directly.

For a prototype, that's fine. For production, it becomes a liability: changing a user's name means updating every row where it appears instead of updating it once and letting references handle the rest.
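The separation described above can be sketched in a few lines. In this illustration the data layer stores users once and references them by ID, the UI works with display-ready rows, and one function translates between the two. The `Store`, `TaskRecord`, `TaskRowView`, and `toTaskRows` names, along with the sample tasks, are assumptions chosen for the example.

```typescript
// Data language: normalized records that reference each other by ID.
interface UserRecord {
  id: string;
  name: string;
}

interface TaskRecord {
  id: string;
  title: string;
  assigneeId: string;
}

interface Store {
  users: Record<string, UserRecord>;
  tasks: Record<string, TaskRecord>;
}

// UI language: display values only, no IDs to resolve.
interface TaskRowView {
  key: string;
  title: string;
  assigneeName: string;
}

// Translation layer: resolves references into what the components display.
function toTaskRows(store: Store): TaskRowView[] {
  return Object.keys(store.tasks).map((taskId) => {
    const task = store.tasks[taskId];
    const assignee = store.users[task.assigneeId];
    return {
      key: task.id,
      title: task.title,
      assigneeName: assignee ? assignee.name : "Unassigned",
    };
  });
}

const store: Store = {
  users: { u1: { id: "u1", name: "Ana" } },
  tasks: {
    t1: { id: "t1", title: "Write spec", assigneeId: "u1" },
    t2: { id: "t2", title: "Review rules", assigneeId: "u1" },
  },
};

// One update in the data layer...
store.users["u1"].name = "Ana Gomez";

// ...and every row that references the user picks it up.
const rows = toTaskRows(store);
```

With a flat, spreadsheet-style structure the same rename would mean editing the name inside both task rows.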

We can even work with mocked APIs during development. Build the frontend against a fake generated backend, knowing that once the real API exists, we just swap the connection layer. The components don't change. That flexibility comes from enforcing the separation.
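A minimal sketch of that swap, assuming a hypothetical `UserApi` interface as the connection layer: the component-side code depends only on the interface, and an in-memory mock stands in until the real backend exists. The names `UserDto`, `createMockUserApi`, and `loadUserHeading` are illustrative.

```typescript
interface UserDto {
  id: string;
  name: string;
}

// The connection layer: the only contract the frontend depends on.
interface UserApi {
  getUser(id: string): Promise<UserDto>;
  renameUser(id: string, name: string): Promise<UserDto>;
}

// In-memory fake backend for development. Copies are returned so callers
// cannot mutate the mock's internal state by accident.
function createMockUserApi(seed: UserDto[]): UserApi {
  const users = new Map<string, UserDto>();
  for (const user of seed) {
    users.set(user.id, { ...user });
  }

  const find = (id: string): UserDto => {
    const user = users.get(id);
    if (!user) {
      throw new Error(`Unknown user: ${id}`);
    }
    return user;
  };

  return {
    async getUser(id) {
      return { ...find(id) };
    },
    async renameUser(id, name) {
      const updated = { ...find(id), name };
      users.set(id, updated);
      return { ...updated };
    },
  };
}

// Component-side code: it receives a UserApi and never knows which one.
async function loadUserHeading(api: UserApi, id: string): Promise<string> {
  const user = await api.getUser(id);
  return `Editing ${user.name}`;
}
```

When the real API is ready, an implementation of the same interface (for example one built on `fetch`) is passed in instead of the mock, and `loadUserHeading` stays untouched.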

What This Looks Like in Practice

The methodology sounds straightforward in description. The reality involves iteration and course correction.

We've changed the entire technology stack during development on some projects. Started with one approach suggested by the client, realized it didn't fit, pivoted to something else, then pivoted again. That's normal. The methodology makes iteration productive.

The specification documents evolve as understanding deepens. The extracted rules accumulate over time, making each subsequent feature easier to build correctly. The context repositories grow more valuable with each project.

| Phase | Primary Activity | Output |
| --- | --- | --- |
| Specification | Document requirements and constraints. | Technical specification document. |
| Implementation | Guided AI conversation with comprehensive context. | Working code matching specifications. |
| Reverse Prompting | Extract patterns from successful implementation. | Rules and guidelines for future work. |
| Architecture | Enforce separation and refactor coupling. | Maintainable, evolvable codebase. |

Demystifying the AI Development Hype

The tools aren't as advanced as the "Hollywood-style" demos suggest. The demos are impressive. But they're optimized for showing capability in controlled conditions.

If you don't care much about quality, security, or liability, the tools work great for generating code. For production systems where those things really matter, you need the human in the loop. The human's job is to do the work, to improve what the AI did, or to tell the AI how to do it properly. That's the reality.

AI keeps evolving. It's getting better at planning, documentation, and execution with each model release. But that evolution doesn't eliminate the need for human involvement. It changes what humans focus on.

Today you might review every function. Tomorrow you might review architectural decisions while the AI handles implementation details. The human in the loop isn't going away. The human is moving up the abstraction ladder.

We're not against AI. We use it constantly. We're against the slot machine mentality of pulling the prompt lever hoping for a jackpot. That's not engineering. That's gambling.

The framework we've described here is how we get consistent results. Specifications before prompts. Context over cleverness. Extracting patterns from success. Enforcing architecture that allows evolution.

What Comes Next for AI Development

This article covers the overall methodology. We're planning detailed explorations of each phase with concrete examples from actual implementations.

The specification phase alone has enough nuance for its own article: how we structure documents, what level of detail works, how specifications evolve during development. The reverse prompting process has patterns we've refined through repeated use. Architecture enforcement looks different across technology stacks.

Those details will come in subsequent articles over the coming weeks. For now, this is the framework we use and why we use it.

Want to discuss how this applies to your situation? Contact us for a free consultation. We'll help you evaluate whether this methodology fits your needs.


By Jesus Manuel Olivas, December 18, 2025
